I’m in Barcelona as VMworld 2018 winds down, but I’m eager to attend Supercomputing next week in Dallas. This conference has been around since 1988 and draws about 11,000 attendees. It is billed as the international conference for HPC (High Performance Computing), networking, storage, and analysis. That sounds like what most of us do for a living, doesn’t it?

Defining supercomputing

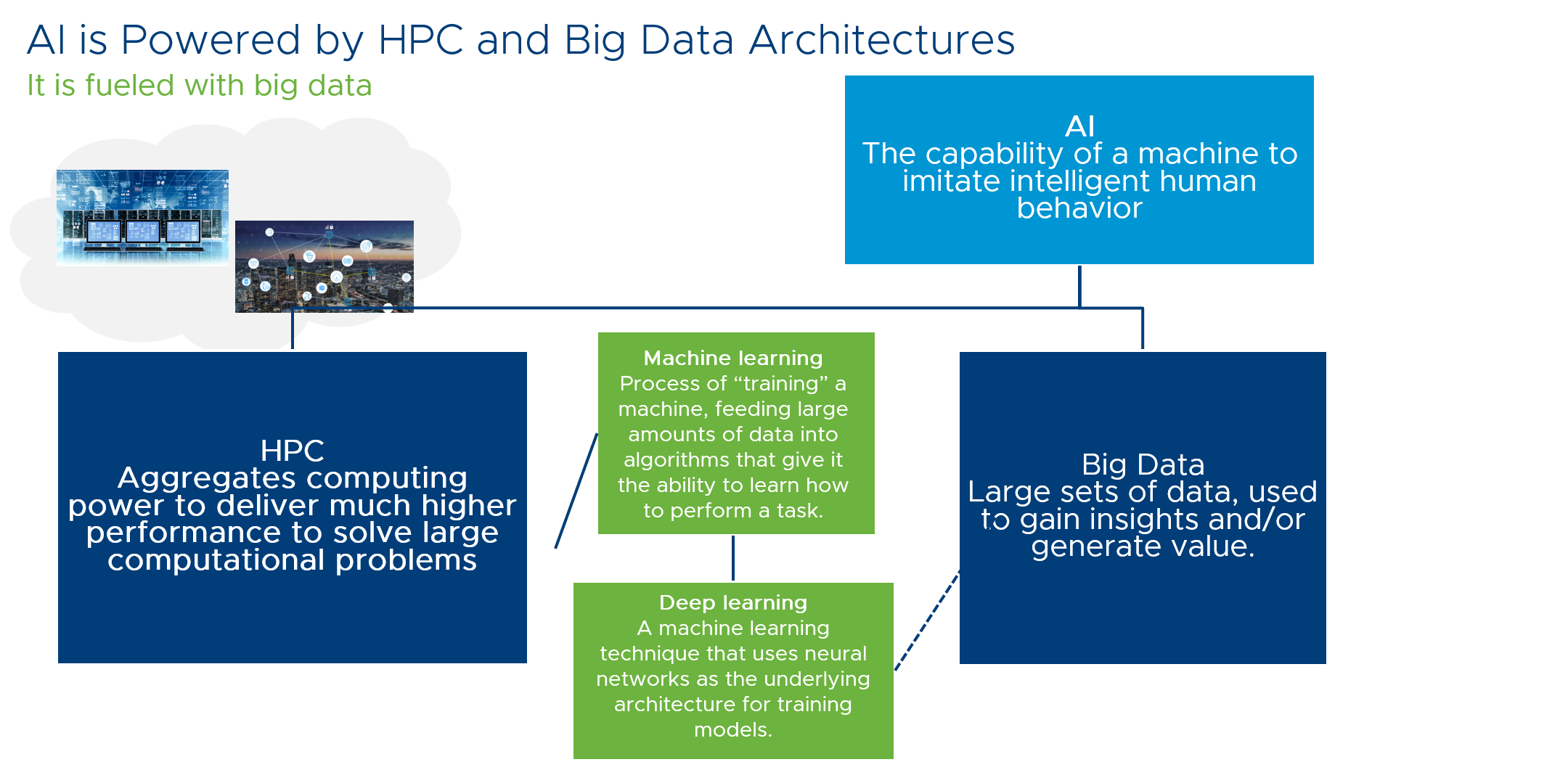

The dictionary definition of a supercomputer is “a large very fast mainframe used especially for scientific computations.” But HPC architectures don’t typically include mainframes. In the context of HPC, supercomputing is better described as “aggregating computing power to deliver much higher performance to solve large computational problems.” Machine Learning (ML) and Deep Learning (DL) are derivative HPC architectures designed to feed large amounts of data to algorithms in order to train them to perform tasks, sometimes using deep neural networks as the underlying architecture.

Familiar topics at Supercomputing

These architectures are traditionally bare metal, with powerful GPUs or FPGAs for acceleration and super-fast interconnects like InfiniBand to increase performance. In other words, a supercomputing architecture is built from the same building blocks we use for enterprise applications. If you look at some of the tutorials being offered, they sound a lot like what we’re talking about in Barcelona, with a scientific application twist: Container Computing for HPC and Scientific Workflows; InfiniBand, Omni-Path, and High-Speed Ethernet: Advanced Features, Challenges in Designing HEC Systems, and Usage; Productive Parallel Programming for FPGA with High-Level Synthesis; and Containers, Collaboration, and Community: Hands-On Building a Data Science Environment for Users and Admins.

This is the second Supercomputing conference I’ve attended. Last year, I realized that I had supported supercomputing as a sysadmin at the Smithsonian Computational Facility at Harvard. It was the only role where I didn’t understand the workloads I was supporting, but at SC17 one of the keynotes explained radio astronomy and I finally got it.

Even supercomputing workloads will virtualize!

I’ll be there with the VMware team that supports virtualizing these environments. As a technologist, I’ve found this a really interesting journey: I’ve gone from building architectures for applications I couldn’t understand to explaining the benefits of virtualizing these environments to people who are new to VMware. It is awesome to describe vMotion to someone who has never seen it before!

Back to the current week: the team is also at VMworld talking about virtualizing these workloads. I have a session on Thursday morning at 8 AM [VAP2340BE] designed to define terms and give virtualization admins and architects the vocabulary to talk with data scientists who may be looking to them for help improving the performance and manageability of supercomputing workloads.

If you’re at Supercomputing, please find us in the Dell Technologies booth. Would love to see you there.
