Enterprise administrators are often blamed for performance issues they have no visibility into. Virtual environments are monitored constantly to deliver strong performance for end users, but the way that data is typically viewed can lead us to assume that an individual user’s performance is fine when it is not. In a Citrix environment, storage, network, and other infrastructure are typically monitored separately. Even when everything sits in a single monitoring solution, it captures only the overall infrastructure performance level. Fortunately, there are now tools that give you full visibility into the end-user experience, and even scorecards to benchmark your performance against industry standards. In this post, we’ll look at the challenges of tracking and benchmarking user performance, walk through a real-world scenario in which discovering user performance was a challenge, and then wrap things up.

Performance Benchmark Challenges

In my experience, enterprises often limit the budget for monitoring tools and try to rely on native options to monitor their virtual environments. That can leave the enterprise administrator scrambling to cobble together an answer to a performance problem when a user calls in for support. Enterprises often have multiple monitoring tools, and in many cases administrators need to look manually through multiple logs and performance counters to come up with an answer. When I was working at a very large healthcare organization, we were limited because the person in charge of our EHR on Citrix deployment didn’t include budget for monitoring tools. Even after we requested it, no additional funding was provided. Instead, the on-call person started very early every day with a routine of PowerShell scripts that checked service availability, event log reviews, and analysis of the Citrix console and the EHR application console. In time this proved to be very inefficient, and when end-user problems were reported it provided answers only about availability, not about performance. The reality is that just because the application is online doesn’t mean the performance is where it should be.
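To make the gap between "online" and "performing well" concrete, here is a minimal Python sketch of an availability check that also records how long the connection took. It is not the script we ran at the time (that was PowerShell), and the host name, port, and 500 ms threshold are assumptions chosen purely for illustration. TCP connect time is also only a rough stand-in for what a user actually feels.

```python
import socket
import time
from typing import Optional, Tuple


def probe(host: str, port: int, timeout_s: float = 3.0) -> Tuple[bool, Optional[float]]:
    """Try a TCP connection and return (reachable, connect time in ms)."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            return True, elapsed_ms
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return False, None


def classify(reachable: bool, elapsed_ms: Optional[float], slow_ms: float = 500.0) -> str:
    """Separate 'online' from 'online and responsive'.

    slow_ms is an illustrative threshold, not an industry standard.
    """
    if not reachable or elapsed_ms is None:
        return "unavailable"
    if elapsed_ms > slow_ms:
        return "available but slow"
    return "available"


if __name__ == "__main__":
    # Hypothetical host and port; replace with a service you are allowed to probe.
    reachable, elapsed_ms = probe("ehr-app.example.internal", 443)
    print(classify(reachable, elapsed_ms))
```

An availability-only check would report the first and second cases as the same "online" result, which is exactly the blind spot described above.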

End-user performance is experience, right?

So far our focus has been on the performance of the application, based strictly on how the back-end system is functioning. But does the performance of a server guarantee that the end-user experience is where it needs to be? The experience is what the end user is telling you. The performance is what the administrator perceives from individual IT component metrics such as CPU, memory, networking, and the number of users connected to the server. There is a perception that if these numbers look okay to the administrator, the user doesn’t have an experience problem. That is likely not the case. Going back to my time administering an EHR application on a budget that wouldn’t allow for proper monitoring of the environment, end-user experience could never really be fully qualified. That left us as a team chasing down performance problems based on user reports, problems that we most likely were never going to be able to resolve permanently.
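A small Python sketch shows how a healthy-looking server-wide number can hide one user’s bad experience. The user names, the latency samples, and the 150 ms threshold are all made up for illustration; they are not measurements from the environment described here or from any product.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical per-user session latency samples in milliseconds.
SAMPLES: Dict[str, List[float]] = {
    "alice": [40.0, 45.0, 50.0],
    "bob": [42.0, 48.0, 44.0],
    "carol": [300.0, 320.0, 310.0],
    "dave": [38.0, 41.0, 43.0],
}


def server_average(samples: Dict[str, List[float]]) -> float:
    """Average over every sample, the way an aggregate dashboard would."""
    all_values = [value for values in samples.values() for value in values]
    return mean(all_values)


def users_over_threshold(samples: Dict[str, List[float]], threshold_ms: float) -> List[str]:
    """Return users whose own average latency exceeds the threshold."""
    return sorted(
        user
        for user, values in samples.items()
        if values and mean(values) > threshold_ms
    )


if __name__ == "__main__":
    threshold_ms = 150.0  # illustrative threshold only
    print(f"server average: {server_average(SAMPLES):.1f} ms")
    print("users over threshold:", users_over_threshold(SAMPLES, threshold_ms))
```

With this sample data the server-wide average stays under the threshold, yet one user is well over it. That user is the one who calls the help desk.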

Benchmark End-User Experience Scorecard

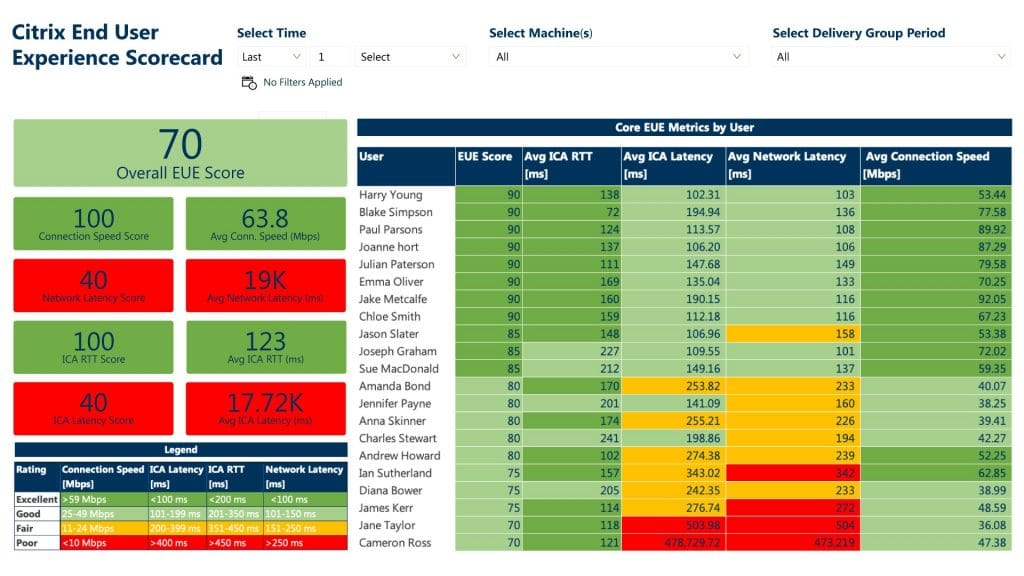

Without a proper toolset that can truly analyze end-user experience, end-user performance can only be inferred, and the inference is only as good as a guess. In my experience the native toolsets will only take you so far. For example, at the healthcare organization running EHR on Citrix, we used the native tools, PowerShell scripting, and Windows Server logs to confirm that the servers were online and had some IT element resource capacity. That approach doesn’t provide data on end-user experience or ensure that data is retained for any period of time. The other challenge was that anyone troubleshooting the environment needed permissions to Citrix and an education in both the EHR application and Citrix, which limited who could interpret the dataset. None of these steps were leading to successful business outcomes. With the proper toolset, my team could have had insight into end-user experience through the Goliath Citrix End-User Experience Scorecard. Troubleshooting would still have been required, but the answer would have been easier to find. The End-User Experience Scorecard also gives IT objective data to report to management, with definitive proof of end-user experience forensics. See the example of the Scorecard above.

Concluding thoughts

Upon review, it’s clear that cobbling together a monitoring process from native toolsets is not an effective way to determine true Citrix user experience. Implementing a holistic solution developed with the end-user experience in mind can help ensure the best possible outcomes for your Citrix users and the business.

Sponsored by Goliath Technologies

Goliath Technologies offers end-user experience monitoring and troubleshooting software, with embedded intelligence and automation, that enables IT pros to anticipate, troubleshoot, and document performance issues regardless of where workloads, applications, or users are located. By doing so, Goliath helps IT break out of reactive mode and into proactive mode. Customers include Universal Health Services, Ascension, CommonSpirit, Penn National Insurance, American Airlines, Office Depot, Tech Mahindra, Pacific Life, Xerox, HCL, and others. Learn more about how we empower proactive IT at goliathtechnologies.com.

“When end users are complaining of poor performance and blaming Citrix, we use Goliath to show where the issues are actually coming from. Usually, we can find that a bad internet connection is the real culprit.” - John Gorena, Architect of the Capital

Learn how you can use objective data to benchmark end-user experience against industry standards.