As an industry, we have years of experience virtualizing applications, but can we virtualize deep learning? In my last post, I mentioned that HPC applications are architected in several ways. But how can we architect virtual environments if we don’t understand the applications we’ll run on them? In this post, I’ll describe deep learning applications and discuss how these architectures can be virtualized.

Defining the terms

First, let’s define terms:

- HPC: High performance computing. See my last post for a detailed definition.

- GPU: Graphics Processing Unit. I like this TechTarget definition. This video is a great overview of the history of GPUs; I’ve started it for you at around 2006, when GP-GPUs (general-purpose GPUs) were introduced. That’s when GPUs become interesting for HPC applications like deep learning.

- Deep Learning: The definition in the VMware VROOM blog is great: “Machine learning is an exciting area of technology that allows computers to behave without being explicitly programmed, that is, in the way a person might learn.”

Deep learning is done over neural networks. Neural networks are a family of algorithms built around a model of artificial neurons arranged in three or more layers. A neuron consists of:

- A set of input values (xi) and associated weights (wi).

- A function (g) that sums the weighted inputs and maps the result to an output (y).

Neurons are organized into layers:

- Input layer: these are not neurons. The input layer simply holds the values from a data set.

- Hidden layers (there may be several): this is where the neural network processes the values from the input layer.

- Output layer: this produces the results of the network’s computation, such as a prediction or classification.
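To make the neuron and layer definitions concrete, here is a minimal Python sketch. It assumes a sigmoid for g (one common choice; the definition above doesn’t name one), leaves out a bias term to match that definition, and uses made-up weights rather than trained ones.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into (0, 1); an assumed choice for g."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], g=sigmoid) -> float:
    """y = g(sum of w_i * x_i): weight each input, sum, then apply g."""
    if len(inputs) != len(weights):
        raise ValueError("need one weight per input")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return g(weighted_sum)


def layer(inputs: list[float], weight_rows: list[list[float]], g=sigmoid) -> list[float]:
    """A layer is several neurons reading the same inputs, each with its own weights."""
    return [neuron(inputs, w, g) for w in weight_rows]


# Input layer: raw values from a hypothetical data set; no computation happens here.
x = [0.5, -1.0, 2.0]

# One hidden layer of two neurons feeding an output layer of one neuron.
# These weights are illustrative only, not learned.
hidden_weights = [[0.1, 0.4, -0.2], [-0.3, 0.2, 0.5]]
output_weights = [[1.0, -1.0]]

hidden = layer(x, hidden_weights)
y = layer(hidden, output_weights)
print("hidden layer:", hidden)
print("output layer:", y)
```

Each neuron only ever does a weighted sum followed by g; the “depth” of a network comes from chaining layers so that one layer’s outputs become the next layer’s inputs.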

What kind of problems are solved by deep learning?

This NVIDIA article explains that deep learning is a technique to accomplish machine learning. Machine learning is an approach to achieve AI (artificial intelligence). What kind of problems does deep learning solve?

- Identifying text in one language and translating it into another.

- Determining whether your house is a good candidate for solar panels.

- Self-driving cars.

- Smart assistants (like Siri).

- Predicting earthquakes.

Longer lists of problems solved by deep learning can be found here and here.

How does deep learning work?

Deep learning is, at its core, an algorithm. The neural network is “trained” with input data for which the correct outputs are already known; this data is called the training set. Training adjusts the weights in the hidden layers so the network learns how much importance to give each feature of the input. The result is a set of learned coefficients (the weights), stored as one vector or matrix per layer of the network. There is also a test set, which is run through the network after training to check how well it performs on data it hasn’t seen and whether any more tweaking is needed.
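The train-then-test cycle described above can be sketched with a single linear neuron, y = w * x + b, fit by gradient descent. This is a deliberately tiny illustration under assumed conditions: the data is generated from y = 2x + 1, and the learning rate and epoch count are hand-picked. Real deep networks have many layers and compute their gradients with backpropagation, usually through a framework such as TensorFlow.

```python
# Training set: inputs paired with known correct outputs (assumed rule: y = 2x + 1).
train = [(float(x), 2.0 * x + 1.0) for x in range(10)]
# Test set: held-out inputs the neuron never sees during training.
test = [(float(x), 2.0 * x + 1.0) for x in range(10, 13)]


def predict(w: float, b: float, x: float) -> float:
    return w * x + b


def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the neuron's predictions on a data set."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def train_neuron(data, learning_rate=0.02, epochs=5000):
    w, b = 0.0, 0.0  # start from arbitrary parameters
    n = len(data)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        # Move each parameter a small step against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train_neuron(train)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"test-set error: {mse(w, b, test):.2e}")
print("prediction for unseen x=20:", predict(w, b, 20.0))
```

Because the test set is held out from training, its error is an honest check of whether the learned w and b generalize; a high test error would be the signal to tweak the model or its training settings.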

Once the network is trained, it can be shown a set of inputs it hasn’t seen before and make predictions based on its training.

The decisions and predictions a neural network makes as inputs pass through it are, underneath, the results of many large matrix multiplications. Check out this post for a detailed explanation of the math.
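To see where the matrix multiplication comes from: a layer computes one weighted sum per neuron for every input example. If a batch of inputs is stacked as the rows of a matrix X and each neuron’s weights form a column of a matrix W, then X · W yields every one of those weighted sums in a single product. The weights and batch below are hypothetical, and g is again assumed to be a sigmoid.

```python
import math


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product: (n x k) times (k x m) gives (n x m)."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(len(a))]


# Hypothetical layer with 3 inputs and 2 neurons: W is 3 x 2,
# one column of weights per neuron.
W = [[0.1, -0.3],
     [0.4, 0.2],
     [-0.2, 0.5]]

# A batch of 4 input examples, one per row (4 x 3).
X = [[0.5, -1.0, 2.0],
     [1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

# All 4 x 2 weighted sums (every example, every neuron) in one product.
Z = matmul(X, W)

# Apply g element by element to get the layer's outputs.
H = [[1.0 / (1.0 + math.exp(-z)) for z in row] for row in Z]
print(Z)
print(H)
```

Training repeats products like this (plus similar ones for the gradients) for every layer, every batch, and every pass over the data, which is why the arithmetic adds up so quickly.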

How do GPUs help deep learning happen?

This article describes what all of those math operations mean in terms of architectural requirements:

VGG16 (a convolutional neural network with 16 weight layers, which is frequently used in deep learning applications) has ~140 million parameters, aka weights and biases. Now think of all the matrix multiplications you would have to do to pass just one input through this network! It would take years to train this kind of system with traditional approaches.
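The ~140 million figure can be checked with a little arithmetic. This sketch assumes the standard ImageNet configuration of VGG16 (224×224 RGB input, 3×3 convolutions, 2×2 max pooling, 1,000 output classes) and counts one weight per connection plus one bias per output channel or neuron.

```python
# Output channels for each 3x3 convolution; "M" marks a 2x2 max-pooling step.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
# Fully connected layers: 7*7*512 flattened features -> 4096 -> 4096 -> 1000 classes.
VGG16_FC = [7 * 7 * 512, 4096, 4096, 1000]


def count_vgg16_params(in_channels: int = 3, kernel: int = 3) -> tuple[int, int]:
    total, weight_layers = 0, 0
    for spec in VGG16_CONV:
        if spec == "M":
            continue  # pooling has no learnable parameters
        # kernel*kernel*in_channels weights per filter, one bias per filter.
        total += kernel * kernel * in_channels * spec + spec
        in_channels = spec
        weight_layers += 1
    for fan_in, fan_out in zip(VGG16_FC, VGG16_FC[1:]):
        total += fan_in * fan_out + fan_out  # weight matrix + bias vector
        weight_layers += 1
    return total, weight_layers


params, layers = count_vgg16_params()
print(f"{layers} weight layers, {params:,} parameters")
# prints "16 weight layers, 138,357,544 parameters"
```

The first fully connected layer alone holds 25,088 × 4,096 ≈ 102.8 million weights, so a single input already requires on the order of a hundred million multiply-adds in that one layer.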

If your network is just being trained to recognize cats on the internet, it may be OK if processing the data takes a long time. But if you are trying to recognize cancer cells, or make sure your self-driving car navigates tricky traffic situations, you’re going to want your answers very quickly. The way to do these operations faster is to do many of them at once, in parallel, and that is where the power of a GPU comes in. Can the GPU be virtualized, so that you can virtualize deep learning?
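Why does doing the math “at once” work? Each element of a matrix product depends only on one row of the first matrix and one column of the second, so all of them can be computed independently. The sketch below illustrates that independence by handing rows to a thread pool and checking the result against a sequential loop. It is only an illustration of the data parallelism: in standard CPython, threads won’t actually speed up this arithmetic because of the global interpreter lock, whereas a GPU runs thousands of such independent multiply-adds simultaneously.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row: list[float], b: list[list[float]]) -> list[float]:
    """One output row: depends only on this row of A and on B."""
    return [sum(x * b[p][j] for p, x in enumerate(row)) for j in range(len(b[0]))]


def matmul_sequential(a, b):
    return [row_times_matrix(row, b) for row in a]


def matmul_parallel(a, b, workers: int = 4):
    # Output rows are independent, so workers can compute them in any order;
    # Executor.map still returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


A = [[float(i + j) for j in range(64)] for i in range(32)]
B = [[float(i - j) for j in range(16)] for i in range(64)]
assert matmul_parallel(A, B) == matmul_sequential(A, B)
```

Because no output element waits on any other, the work splits cleanly across however many processing units are available, which is exactly the shape of problem GPUs are built for.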

How do you virtualize deep learning environments?

You can virtualize the GPUs that help deep learning happen. There are two methods if you use VMware vSphere and NVIDIA GPUs:

- DirectPath I/O passthrough mode

- NVIDIA GRID vGPU mode

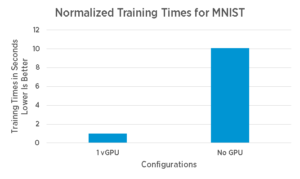

Check out this excellent blog post for complete details on both methods, along with performance data showing that virtualized GPUs help train your neural networks faster. This image shows how much faster training is with a vGPU than with no GPU, for a demo model in the TensorFlow library (an open source library for machine intelligence) using the MNIST dataset.

Even though these may be new types of applications, there is plenty of content online to help you understand this new world.

These are some of the articles I used to write this post. If you want to learn more about how to virtualize deep learning or other HPC apps, just start reading!

Update: I received feedback on my description of how neural networks work, and I updated my post for accuracy.